Slice AI LLC

Slice AI focuses on enabling large language models (LLMs) to operate with increased autonomy. The objective is to facilitate the development of artificial general intelligence (AGI) by allowing LLMs to learn continuously with minimal supervision.

Our systems allow LLMs to learn from new examples, fostering more independent decision-making.

Contact: Charles@sliced-ai.com

Research

- Memory Retention, Learning Rates, and Rare Memory Injection in LLMs

- Expanding Embedding Spaces

- Exploring Thousands of Inferences on a Single Prompt

Discussions

- Why Don’t Our Heads Explode Thinking About Stop Signs?

- Are Humans Deterministic? Should LLMs Make Spelling Mistakes?

- Strong vs. Weak AI Agents

- LLM Meetings: A Study on Multi-Agent Coherency

- LLM Agent Depth Coherence Study (Incomplete)

- Compounding Reasoning Chains

- The Forever AI Agent

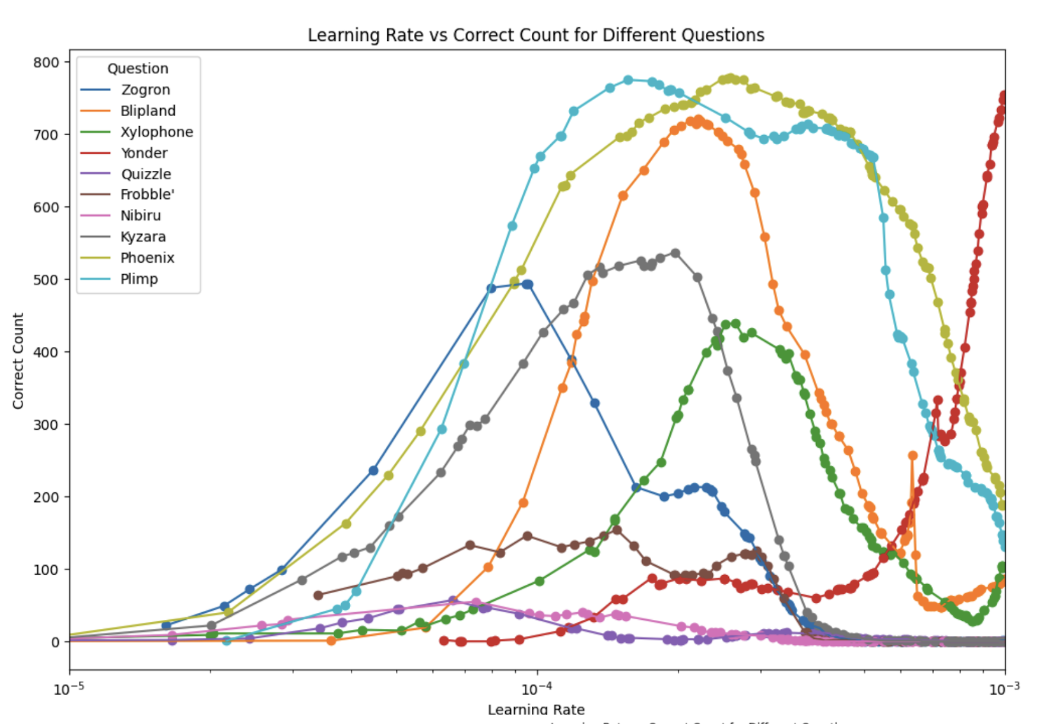

Memory Retention, Learning Rates, and Rare Memory Injection in LLMs

Download "Investigating Learning Rates and Memory Retention"

This research investigates how the learning rate used during training affects memory retention in LLMs; retention varies significantly across the learning rates tested.

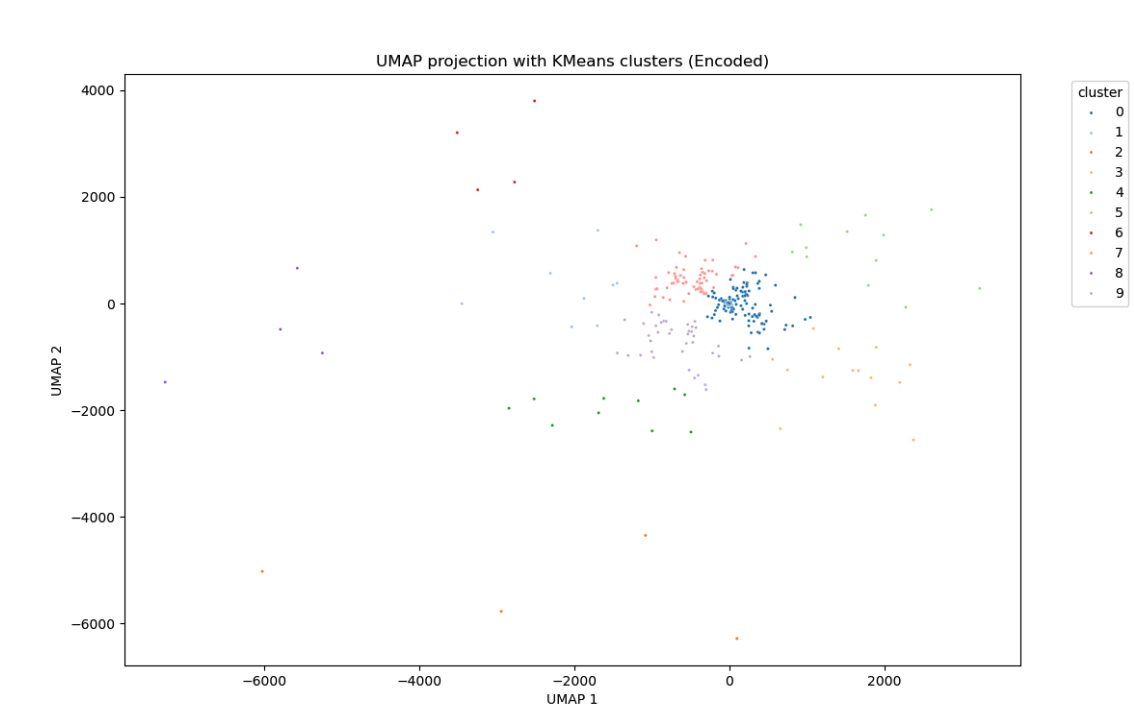

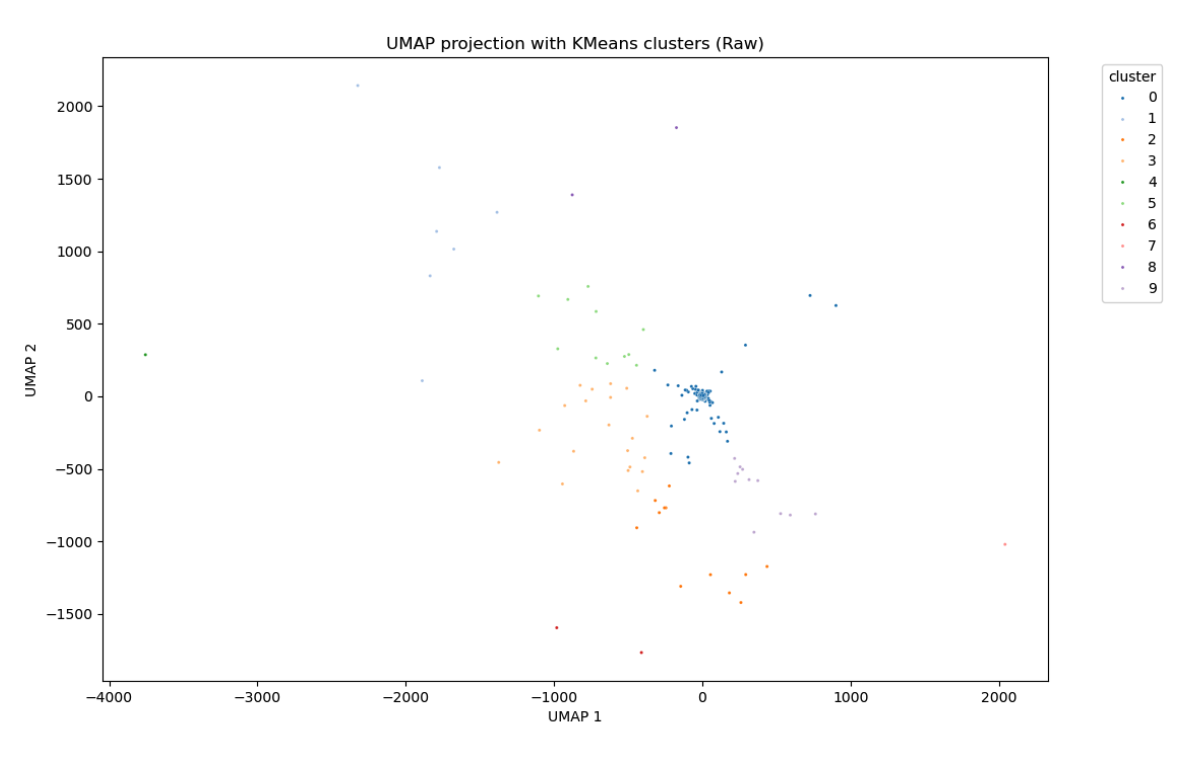

Expanding Embedding Spaces

Download "Expanding Embedding Spaces"

This study explores how expanding embedding spaces, using autoencoders and progressive training, improves data retrieval for LLMs during long-running tasks.

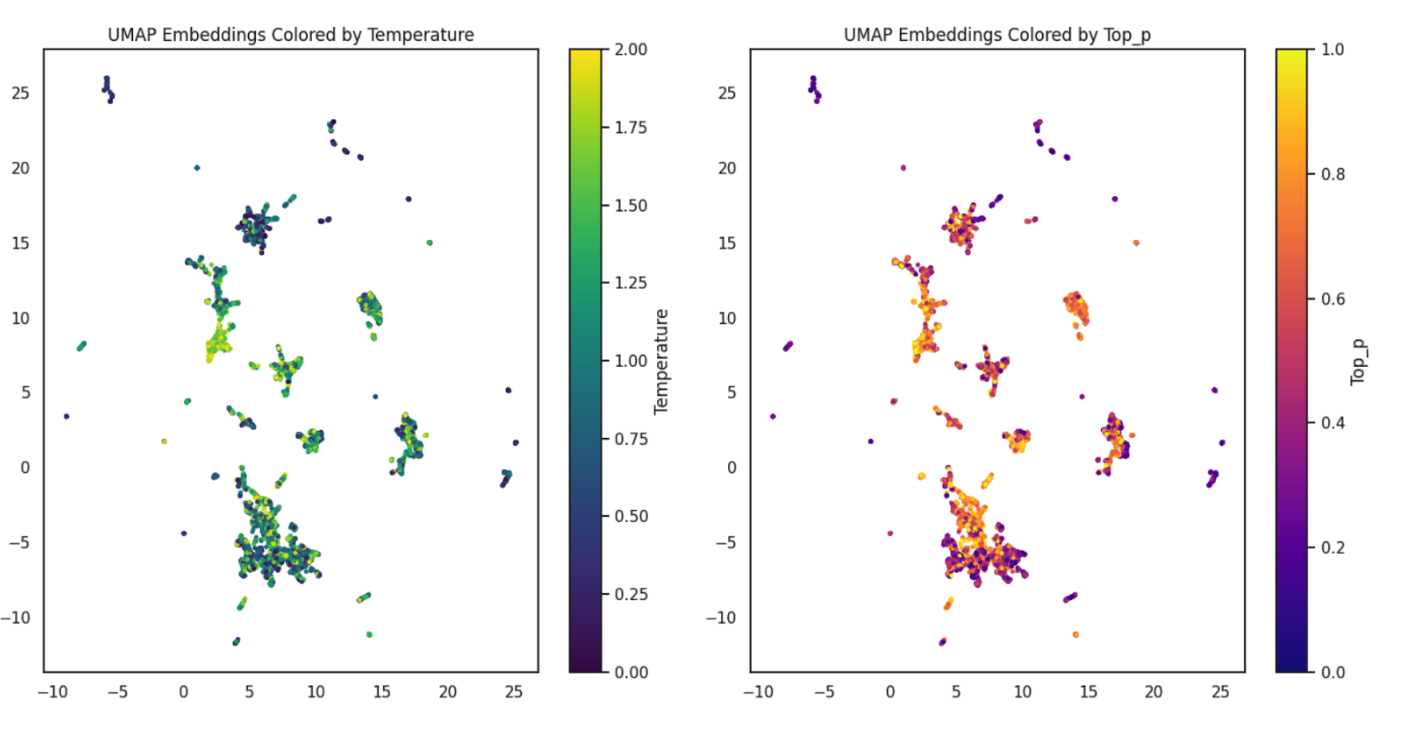

Exploring Thousands of Inferences on a Single Prompt

Download "Exploring Thousands of Inferences on a Single Prompt"

This paper studies how hyperparameters such as temperature, top-p, sequence length, and token length affect output diversity across thousands of inferences on the same prompt. Despite subtle variations, the outputs remain too similar to reliably infer which hyperparameters produced them.
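The paper's exact setup is not described here. As a rough illustration of how two of these hyperparameters shape output diversity, the Python sketch below applies temperature scaling and top-p (nucleus) filtering to a toy logit vector, then samples it many times and counts the distinct tokens produced. The function names, the 4-token toy vocabulary, and the "distinct tokens" metric are hypothetical choices for illustration, not the method used in the paper; sequence length and token length are not modeled.

```python
import math
import random


def softmax(logits, temperature):
    """Convert logits to probabilities, scaled by temperature (must be > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most-likely tokens whose cumulative
    probability reaches top_p, then renormalize over that set."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, temperature, top_p, rng):
    """Draw one token index after temperature scaling and top-p filtering."""
    filtered = top_p_filter(softmax(logits, temperature), top_p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def distinct_tokens(logits, temperature, top_p, n=1000, seed=0):
    """Hypothetical diversity measure: how many different tokens appear
    across n independent samples from the same logits."""
    rng = random.Random(seed)
    return len({sample_token(logits, temperature, top_p, rng) for _ in range(n)})


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, 0.1]  # hypothetical 4-token vocabulary
    for temperature, top_p in [(0.7, 0.1), (1.0, 0.9), (1.0, 1.0)]:
        count = distinct_tokens(toy_logits, temperature, top_p)
        print(f"temperature={temperature}, top_p={top_p}: {count} distinct tokens")
```

Lower temperatures sharpen the distribution toward the most likely token, and lower top-p values cut off the tail entirely, so both reduce diversity. In a real LLM this happens at every generation step over the full vocabulary, which is why differences between settings can be subtle once outputs are compared as whole texts.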